Categories: CarmupediaData

Date: February 21st, 2023

How ChatGPT works

Summary

What is generative AI?

Generative artificial intelligence (AI) is a kind of machine learning. The term describes algorithms (such as ChatGPT) that can be used to create new content, including audio, code, images, text, simulations, and videos. Recent breakthroughs in the field have the potential to drastically change the way we approach content creation.

What are ChatGPT and DALL-E?

ChatGPT and DALL-E are two of the most advanced open-source natural language processing (NLP) models developed by OpenAI. ChatGPT is a text-generating model based on the GPT-2 model, which can generate conversation responses in any language. DALL-E is an image-generating model based on the GPT-2 model, which can generate images from text descriptions. Both models have the potential to revolutionize natural language processing and artificial intelligence.

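Did anything in that paragraph seem off to you? Maybe not. The grammar is perfect, the tone works, and the narrative flows. Yet it contains factual errors: neither ChatGPT nor DALL-E is open source, and neither is based on GPT-2 (ChatGPT, for example, was fine-tuned from a model in OpenAI's GPT-3.5 series).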

That’s why ChatGPT (the GPT stands for generative pretrained transformer) is receiving so much attention at the moment. It’s a free chatbot that can generate an answer to almost any question it’s asked. Developed by OpenAI and released for testing to the general public in November 2022, it is already considered by many to be the best AI chatbot yet.

GPT-2 translates text, answers questions, summarizes passages, and generates text output on a level that is sometimes indistinguishable from that of humans, although it can become repetitive or nonsensical when generating long passages. ChatGPT, in turn, produces computer code, college-level essays, poems, and even halfway-decent jokes.

What kinds of problems can a generative AI model solve?

Generative AI tools can produce a wide variety of credible writing in seconds. This has implications for many industries, from IT and software organizations that can benefit from the largely correct code these tools generate to organizations in need of marketing copy. In short, any organization that needs to produce clear written materials potentially stands to benefit.

Organizations can also use generative AI to create more technical materials, such as higher-resolution versions of medical images.

What are the limitations of AI models? How can these potentially be overcome?

The outputs that generative AI models produce may often sound extremely convincing. This is by design. But sometimes the information they generate is just plain wrong. Organizations that rely on generative AI models should reckon with the reputational and legal risks of unintentionally publishing biased, offensive, or copyrighted content.

These risks can be mitigated, however, in a few ways.

For one, it’s crucial to carefully select the initial data used to train these models to avoid including toxic or biased content.

Next, rather than using an off-the-shelf generative AI model, organizations could consider using smaller, specialized architectures. Organizations with more resources could also customize a general model based on their own data to fit their needs and minimize biases.

Organizations should also keep a human in the loop to check outputs and avoid using generative AI models for critical decisions, such as those involving significant resources or human welfare.

It can’t be emphasized enough that this is a new field. The landscape of risks and opportunities is likely to change rapidly in the coming weeks, months, and years. New use cases are being tested daily, and new models are likely to be developed in the coming years. As generative AI becomes increasingly, and seamlessly, incorporated into business, society, and our personal lives, we can also expect a new regulatory climate to take shape. As organizations begin experimenting with these tools and creating value from them, leaders will do well to keep a finger on the pulse of regulation and risk.

| But it’s clear that generative AI tools like ChatGPT have the potential to change many aspects of everyday life. |

Details

What’s the difference between machine learning and artificial intelligence?

Artificial intelligence is pretty much just what it sounds like—the practice of getting machines to mimic human intelligence to perform tasks. You’ve probably interacted with AI even if you don’t realize it—voice assistants like Siri and Alexa are founded on AI technology, as are customer service chatbots that pop up to help you navigate websites.

Machine learning is a type of artificial intelligence. Through machine learning, practitioners develop artificial intelligence by building models that can “learn” from patterns in data.
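As a minimal illustration of a model “learning” from a data pattern, the sketch below fits a straight line to a handful of example points with ordinary least squares. The data and the function name `fit_line` are made up for illustration; generative models learn vastly more complex patterns, but the principle of deriving parameters from data instead of hand-coding them is the same.

```python
# Minimal sketch: "learning" a linear pattern y = w*x + b from example data
# using ordinary least squares. The data below is illustrative only.

def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return the slope w and intercept b that minimise the squared error."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalised).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # hypothetical data generated by y = 2x + 1
w, b = fit_line(xs, ys)
print(w, b)        # 2.0 1.0  (the learned parameters)
print(w * 10 + b)  # 21.0     (prediction for an unseen input x = 10)
```

The model was never told the rule y = 2x + 1; it recovered both parameters from the examples alone.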

Neural networks

An early concept of artificial intelligence, connectionism, sought to generate intelligent behaviour through artificial neural networks. This involved simulating the behaviour of neurons in biological brains.

Neural networks can be trained to classify different inputs (i.e. sort them into different categories) through a process known as “learning”. Backpropagation, a supervised algorithm, efficiently computes “gradients”: for a given input/output example, they describe how the network’s error changes with respect to every weight in the network, and therefore how each weight should be adjusted to improve the fit. Using these gradients to train neural networks enabled the creation of much more complex systems, and in the 1980s neural networks were widely used for natural language processing.
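The sketch below shows backpropagation on a deliberately tiny network (two inputs, two tanh hidden units, one linear output, squared-error loss), written in plain Python. The architecture, the starting weights, and helper names such as `backprop` and `numeric_grad_W1` are illustrative assumptions, not taken from any GPT model. The backpropagated gradients are checked against finite differences, and one gradient-descent step with them lowers the loss.

```python
import math

# Hypothetical toy network: 2 inputs -> 2 tanh hidden units -> 1 linear output.
def forward(params, x):
    """Return hidden activations and the scalar output for input x."""
    z = [sum(w * xi for w, xi in zip(row, x)) + b
         for row, b in zip(params["W1"], params["b1"])]
    h = [math.tanh(zj) for zj in z]
    y = sum(w * hj for w, hj in zip(params["w2"], h)) + params["b2"]
    return h, y


def loss(params, x, t):
    """Squared-error loss 0.5 * (y - t)^2 for one input/output example."""
    _, y = forward(params, x)
    return 0.5 * (y - t) ** 2


def backprop(params, x, t):
    """Gradients of the loss with respect to every weight, via the chain rule."""
    h, y = forward(params, x)
    dy = y - t                                 # dL/dy
    dz = [dy * w * (1.0 - hj ** 2)             # dL/dz, using tanh' = 1 - tanh^2
          for w, hj in zip(params["w2"], h)]
    return {
        "W1": [[dzj * xi for xi in x] for dzj in dz],
        "b1": dz,
        "w2": [dy * hj for hj in h],
        "b2": dy,
    }


def numeric_grad_W1(params, x, t, j, i, eps=1e-6):
    """Central finite-difference estimate of dL/dW1[j][i], used as a check."""
    orig = params["W1"][j][i]
    params["W1"][j][i] = orig + eps
    up = loss(params, x, t)
    params["W1"][j][i] = orig - eps
    down = loss(params, x, t)
    params["W1"][j][i] = orig                  # restore the weight
    return (up - down) / (2 * eps)


def sgd_step(params, grads, lr):
    """Return new weights moved a small step against their gradients."""
    return {
        "W1": [[w - lr * g for w, g in zip(row, grow)]
               for row, grow in zip(params["W1"], grads["W1"])],
        "b1": [b - lr * g for b, g in zip(params["b1"], grads["b1"])],
        "w2": [w - lr * g for w, g in zip(params["w2"], grads["w2"])],
        "b2": params["b2"] - lr * grads["b2"],
    }


params = {"W1": [[0.5, -0.3], [0.8, 0.2]], "b1": [0.1, -0.1],
          "w2": [1.0, -0.7], "b2": 0.05}
x, t = [1.0, 2.0], 0.5

grads = backprop(params, x, t)
max_diff = max(abs(grads["W1"][j][i] - numeric_grad_W1(params, x, t, j, i))
               for j in range(2) for i in range(2))
print("max |backprop - finite difference|:", max_diff)

updated = sgd_step(params, grads, lr=0.01)
print("loss before/after one step:", loss(params, x, t), loss(updated, x, t))
```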

Deep Learning

Applying this training method to neural networks with hidden layers made it possible to learn internal representations of incoming data, work that laid the basis for recurrent neural networks. Because they can process sequential information, recurrent neural networks have been used in many NLP applications.
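To make “process sequential information” concrete, here is a minimal forward pass of a one-unit Elman-style recurrent network in plain Python. The weights are arbitrary, untrained values chosen for illustration. The point is that the hidden state `h` is carried from one step to the next, so the same inputs presented in a different order lead to a different final state.

```python
import math


def rnn_forward(inputs, w_x, w_h, b):
    """Run a one-unit Elman-style RNN over a sequence of scalar inputs.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b), starting from h_0 = 0.
    Returns the hidden state after every step.
    """
    h = 0.0
    states = []
    for x in inputs:
        # The previous hidden state feeds into the next step: this is the
        # "memory" that lets the network take word order into account.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Illustrative, untrained weights; same inputs, opposite order.
forward_states = rnn_forward([1.0, 0.0, 0.0], w_x=0.9, w_h=0.5, b=0.0)
reversed_states = rnn_forward([0.0, 0.0, 1.0], w_x=0.9, w_h=0.5, b=0.0)
print(forward_states[-1], reversed_states[-1])
```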

Training

GPT-1 was trained on 4.5 GB of text from 7,000 unpublished books of various genres (the BookCorpus dataset). The result was a model with 0.12 billion parameters in a 12-layer architecture.

GPT-2 was trained on 40 GB of text: 8 million documents from 45 million web pages upvoted on Reddit. The result was a model with 1.5 billion parameters in a 48-layer architecture.

GPT-3, released in 2020, was trained on 570 GB of plain text (0.4 trillion tokens), drawn mostly from Common Crawl, WebText, English Wikipedia, and two books corpora. The result was a model with 175 billion parameters in a 96-layer architecture.

GPT-2 was created as a direct scale-up of GPT, with both its parameter count and dataset size increased by a factor of 10.
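A quick check of that scale-up, using the figures from the training overview above (0.12 billion parameters and 4.5 GB of text for GPT-1, 1.5 billion parameters and 40 GB for GPT-2). The ratios come out at 12.5 for parameters and about 8.9 for data, so “a factor of 10” is best read as an approximation.

```python
# Figures taken from the training overview above.
gpt1 = {"params": 0.12e9, "data_gb": 4.5}
gpt2 = {"params": 1.5e9, "data_gb": 40.0}

# How many times larger GPT-2 is than GPT-1 on each axis.
param_ratio = gpt2["params"] / gpt1["params"]
data_ratio = gpt2["data_gb"] / gpt1["data_gb"]
print(f"parameters: x{param_ratio:.1f}, dataset: x{data_ratio:.1f}")
# parameters: x12.5, dataset: x8.9
```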

Both are unsupervised transformer models trained to generate text by predicting the next word in a sequence of tokens. The GPT-2 model has 1.5 billion parameters and was trained on a dataset of 8 million web pages.
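The “masked” self-attention used by decoder-only transformers such as GPT is what makes next-word training possible: each position can only attend to itself and earlier positions, so the model never sees the word it is asked to predict. The sketch below implements a single attention head in plain Python. It is a simplification under stated assumptions: the queries, keys, and values are passed in directly, whereas a real model computes them with learned projections and runs many heads and layers.

```python
import math


def causal_self_attention(q, k, v):
    """Single-head masked (causal) scaled dot-product self-attention.

    q, k, v: lists of vectors, one per token position (q and k share length d).
    Position i may only attend to positions 0..i.
    Returns (outputs, weights), where weights[i][j] is the attention of i on j.
    """
    n, d = len(q), len(q[0])
    outputs, weights = [], []
    for i in range(n):
        # Scores against positions 0..i only; later positions are masked out.
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        m = max(scores)                        # subtract max for stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        # Softmax over visible positions; masked (future) positions get 0.
        row = [e / total for e in exps] + [0.0] * (n - i - 1)
        weights.append(row)
        outputs.append([sum(row[j] * v[j][c] for j in range(n))
                        for c in range(len(v[0]))])
    return outputs, weights


# Hypothetical 3-token input; q = k = v = x stands in for learned projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

The first token can only attend to itself, so its output is exactly its own value vector; every row of weights sums to 1, with zeros above the diagonal.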

While GPT-2 was trained on a very simple objective (interpreting a sequence of words in a text sample and predicting the most likely next word), it produces full sentences and paragraphs by repeatedly predicting additional words, generating fully comprehensible (and semantically meaningful) statements in natural language. Notably, GPT-2 was evaluated on tasks in a zero-shot setting, that is, without being trained on any examples from those specific tasks.
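The generate-by-predicting loop can be illustrated with a much simpler model than GPT-2. The sketch below swaps in a word-bigram counter (it only looks at the previous word) and greedy decoding (always pick the most frequent follower); the corpus and function names are invented for illustration. GPT-2 instead conditions on the whole preceding context and works on subword tokens, but the outer loop of predicting one token and appending it is the same idea.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, max_words):
    """Greedily append the most likely next word, one word at a time."""
    out = [start]
    for _ in range(max_words):
        candidates = follows.get(out[-1])  # .get does not create new entries
        if not candidates:
            break  # no known continuation for this word
        # most_common breaks ties by first occurrence in the training text.
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


corpus = "the car is fast . the car is red . the road is long ."
model = train_bigram(corpus)
print(generate(model, "the", 4))  # the car is fast .
```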

Architecture

The original GPT’s architecture is a twelve-layer decoder-only transformer using twelve masked self-attention heads with 64-dimensional states each (12 × 64 = 768 dimensions in total). During training, the learning rate was increased linearly from zero over the first 2,000 updates to a maximum of 2.5 × 10⁻⁴, and then annealed to 0 using a cosine schedule.
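The numbers in this paragraph can be reproduced with a short Python sketch: the per-head dimension times the number of heads gives the 768-dimensional model width, and `gpt_lr` implements a linear warm-up followed by cosine annealing. The total number of training steps is not stated above, so `total_steps=100_000` is a hypothetical placeholder; only the warm-up length (2,000 updates) and the peak rate (2.5 × 10⁻⁴) come from the text.

```python
import math

# Model width from the attention configuration described above.
n_heads, d_head = 12, 64
d_model = n_heads * d_head  # 768


def gpt_lr(step, max_lr=2.5e-4, warmup_steps=2000, total_steps=100_000):
    """Linear warm-up from 0 to max_lr, then cosine annealing down to 0.

    total_steps is a hypothetical value; the source does not give it.
    """
    if step < warmup_steps:
        return max_lr * step / warmup_steps
    progress = min((step - warmup_steps) / (total_steps - warmup_steps), 1.0)
    return 0.5 * max_lr * (1.0 + math.cos(math.pi * progress))


print("d_model =", d_model)
for step in (0, 1000, 2000, 51_000, 100_000):
    print(step, gpt_lr(step))
```

Halfway through warm-up the rate is half the peak; after warm-up it follows half a cosine wave from the peak down to zero.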

On natural language inference (also known as textual entailment) tasks, models are evaluated on their ability to interpret pairs of sentences from various datasets and classify the relationship between them as “entailment”, “contradiction” or “neutral”.
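A minimal sketch of how such an evaluation is scored: each example is a premise/hypothesis pair with a gold label, and the model's predicted labels are compared against it to give overall and per-label accuracy. The three examples and the always-“entailment” baseline are hypothetical placeholders; a real benchmark uses many labelled pairs, and the `predict` function would wrap the model being tested.

```python
from collections import Counter

LABELS = ("entailment", "contradiction", "neutral")

# Hypothetical examples: (premise, hypothesis, gold label).
examples = [
    ("A man is driving a car.", "A person is in a vehicle.", "entailment"),
    ("A man is driving a car.", "Nobody is driving.", "contradiction"),
    ("A man is driving a car.", "The man is late for work.", "neutral"),
]


def evaluate(predict, data):
    """Overall and per-label accuracy of predict(premise, hypothesis) -> label."""
    correct, total = Counter(), Counter()
    for premise, hypothesis, gold in data:
        if gold not in LABELS:
            raise ValueError(f"unknown label: {gold}")
        total[gold] += 1
        if predict(premise, hypothesis) == gold:
            correct[gold] += 1
    accuracy = sum(correct.values()) / len(data)
    per_label = {label: correct[label] / total[label] for label in total}
    return accuracy, per_label


# Trivial baseline that always answers "entailment"; a real model goes here.
acc, per_label = evaluate(lambda p, h: "entailment", examples)
print(acc, per_label)
```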

Another task, semantic similarity (or paraphrase detection), assesses whether a model can correctly tell if two sentences are paraphrases of one another.
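For contrast with a learned model, the sketch below implements a deliberately naive paraphrase detector: it compares bag-of-words count vectors with cosine similarity and applies a threshold. The threshold of 0.7 and the function names are assumptions for illustration, not part of any benchmark. Its obvious weakness, that two paraphrases using different words score near zero, is exactly what models trained on large corpora are meant to overcome.

```python
import math
from collections import Counter


def cosine_bow(a, b):
    """Cosine similarity between bag-of-words count vectors of two sentences."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[w] * vb[w] for w in va)
    norm = (math.sqrt(sum(c * c for c in va.values()))
            * math.sqrt(sum(c * c for c in vb.values())))
    return dot / norm if norm else 0.0


def is_paraphrase(a, b, threshold=0.7):
    """Hypothetical rule: call the pair a paraphrase if similarity >= threshold."""
    return cosine_bow(a, b) >= threshold


print(cosine_bow("the car is fast", "the car is very fast"))  # about 0.894
print(is_paraphrase("the car is fast", "the car is very fast"))  # True
print(is_paraphrase("the car is fast", "a bicycle was slow"))  # False
```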

Usage of AI

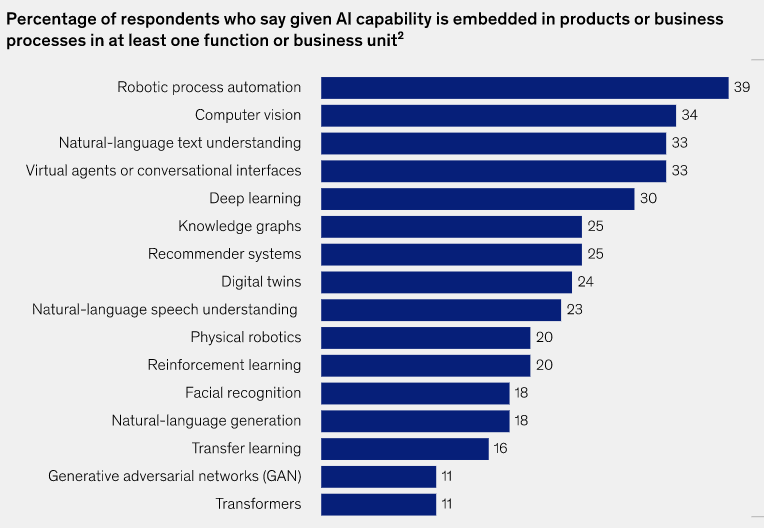

Meanwhile, the average number of AI capabilities that organizations use has increased. Among these capabilities, robotic process automation and computer vision have remained the most commonly deployed each year.

The level of investment in AI has also increased alongside its rising adoption.

In addition, the specific areas in which companies see value from AI have evolved. Earlier, manufacturing and risk were the two functions in which the largest shares of respondents reported seeing value from AI. Today, the biggest reported revenue effects are found in marketing and sales, product and service development, and strategy and corporate finance, while the highest cost benefits from AI are expected in supply chain management.

Sources

- Wikipedia

- McKinsey report

Written by Carmupedia Editorial Office
