What’s the difference between machine learning and artificial intelligence?

Artificial intelligence is pretty much just what it sounds like—the practice of getting machines to mimic human intelligence to perform tasks. You’ve probably interacted with AI even if you don’t realize it—voice assistants like Siri and Alexa are founded on AI technology, as are customer service chatbots that pop up to help you navigate websites.

Machine learning is a type of artificial intelligence. It develops artificial intelligence through models that can “learn” from patterns in data.

Neural networks

An early concept of artificial intelligence, connectionism, sought to generate intelligent behaviour through artificial neural networks. This involved simulating the behaviour of neurons in biological brains.

Neural networks can classify different inputs (i.e. sort them into different categories) through a process known as “learning”. Backpropagation, a supervised algorithm, efficiently computes “gradients”, i.e. vector fields that describe the optimal adjustment of all weights in the entire network for a given input/output example. The use of these gradients to train neural networks enabled the creation of much more complex systems, and in the 1980s neural networks were widely used for natural language processing.
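To make the idea of a gradient more concrete, here is a minimal sketch in Python. It assumes a toy network with one input, one hidden unit and one output, sigmoid activations and a squared-error loss; none of these choices come from the text above, and all function names are hypothetical. It only illustrates how the chain rule yields one adjustment per weight for a single input/output example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(w1, w2, x):
    """One input -> one hidden unit -> one output, both with sigmoid activations."""
    h = sigmoid(w1 * x)
    y = sigmoid(w2 * h)
    return h, y

def loss(w1, w2, x, target):
    """Squared error for a single input/output example."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2

def backprop(w1, w2, x, target):
    """Return (dL/dw1, dL/dw2), computed from the output back to the input."""
    h, y = forward(w1, w2, x)
    delta_out = (y - target) * y * (1.0 - y)        # error signal at the output unit
    grad_w2 = delta_out * h
    delta_hidden = delta_out * w2 * h * (1.0 - h)   # error signal passed back to the hidden unit
    grad_w1 = delta_hidden * x
    return grad_w1, grad_w2

def train(w1, w2, x, target, lr=0.5, steps=200):
    """Plain gradient descent: move each weight against its gradient."""
    for _ in range(steps):
        g1, g2 = backprop(w1, w2, x, target)
        w1 -= lr * g1
        w2 -= lr * g2
    return w1, w2
```

Calling `train(0.3, -0.2, 1.5, 1.0)` returns weights whose loss on that example is lower than at the start. Real networks apply the same idea to many weights and many examples at once.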

Deep Learning

Deep learning used internal representations of incoming data in neural networks with hidden layers, which created the basis for recurrent neural networks. Due to their ability to process sequential information, recurrent neural networks have seen use in many NLP applications.
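The following sketch shows, under simplifying assumptions, what "processing sequential information" means for a recurrent network: the hidden state from the previous step is fed back in together with the current input. The weights are fixed, made-up values (nothing is trained here), and the function names are hypothetical.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One recurrent step: combine the current input with the previous hidden state."""
    size = len(h_prev)
    return [
        math.tanh(w_xh[i] * x + sum(w_hh[i][j] * h_prev[j] for j in range(size)) + b[i])
        for i in range(size)
    ]

def run_rnn(sequence, w_xh, w_hh, b):
    """Feed a sequence of numbers through the network and return the final hidden state."""
    h = [0.0] * len(b)
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)
    return h

# Hypothetical weights for a hidden state with two units.
W_XH = [0.5, -0.3]
W_HH = [[0.1, 0.4], [-0.2, 0.3]]
B = [0.0, 0.1]

forward_state = run_rnn([1.0, 0.0, -1.0], W_XH, W_HH, B)
reversed_state = run_rnn([-1.0, 0.0, 1.0], W_XH, W_HH, B)
```

The two final states differ even though both sequences contain the same values, because the hidden state carries information about the order in which the inputs arrived.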

Training

GPT-1 was trained on 4.5 GB of text from 7,000 unpublished books of various genres. The resulting model has 0.12 billion parameters in a 12-layer architecture.

GPT-2 was trained on 40 GB of text from 8 million documents, drawn from 45 million webpages upvoted on Reddit. The resulting model has 1.5 billion parameters in a 48-layer architecture.

GPT-3, the next version, was trained on 570 GB of plain text (0.4 trillion tokens), mostly from CommonCrawl, WebText, English Wikipedia and two books corpora. The resulting model has 175 billion parameters in a 96-layer architecture.

GPT-2 was created as a direct scale-up of GPT, with both its parameter count and dataset size increased by a factor of 10.
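As a quick sanity check on the "factor of 10" figure, the ratios can be computed from the numbers quoted earlier in this section (0.12 vs. 1.5 billion parameters, 4.5 vs. 40 GB of text). The dictionary keys and the helper name below are just illustrative.

```python
# Figures as quoted in this article.
gpt1 = {"parameters_billion": 0.12, "dataset_gb": 4.5}
gpt2 = {"parameters_billion": 1.5, "dataset_gb": 40.0}

def scale_factor(new, old):
    """How many times larger `new` is than `old`."""
    return new / old

param_factor = scale_factor(gpt2["parameters_billion"], gpt1["parameters_billion"])
data_factor = scale_factor(gpt2["dataset_gb"], gpt1["dataset_gb"])

print(f"parameters: x{param_factor:.1f}, dataset: x{data_factor:.1f}")
# prints "parameters: x12.5, dataset: x8.9"
```

Both ratios are close to 10, so "a factor of 10" is a reasonable round figure for the quoted numbers.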

Both are unsupervised transformer models trained to generate text by predicting the next word in a sequence of tokens. The GPT-2 model has 1.5 billion parameters, and was trained on a dataset of 8 million web pages.

While GPT-2 was trained on a very simple objective (interpreting a sequence of words in a text sample and predicting the most likely next word), it produces full sentences and paragraphs by continuing to predict additional words, generating fully comprehensible (and semantically meaningful) statements in natural language. Notably, GPT-2 was evaluated on its performance on tasks in a zero-shot setting.
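The "predict the next word, then keep going" loop can be sketched with a much simpler stand-in model. The example below is not GPT-2: it swaps the transformer for a plain bigram counter that picks the most frequent follower of the last word. The tiny corpus and all function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it never had one."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, prompt, max_new_words=5):
    """Extend the prompt one word at a time by repeatedly predicting the next word."""
    words = prompt.split()
    for _ in range(max_new_words):
        next_word = predict_next(counts, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)

corpus = "the cat sat on the mat and the cat sat on the sofa"
model = train_bigram(corpus)
continuation = generate(model, "the cat", max_new_words=4)
```

Because this stand-in only looks at the last word, its output quickly loops or drifts. A model like GPT-2 conditions each prediction on the whole preceding sequence of tokens, which is what lets the same loop produce coherent paragraphs.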

Architecture

GPT’s architecture itself is a twelve-layer decoder-only transformer, using twelve masked self-attention heads, with 64-dimensional states each (for a total of 768). The learning rate was increased linearly from zero over the first 2,000 updates, to a maximum of 2.5×10⁻⁴, and annealed to 0 using a cosine schedule.
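The learning-rate schedule described above can be written down in a few lines. This is a sketch under one assumption: the total number of training updates is not given in the text, so `total_steps` is left as a parameter and any value passed to it is hypothetical. The constants for the warmup length, the peak rate and the attention heads are taken from the paragraph above.

```python
import math

MAX_LR = 2.5e-4          # peak learning rate
WARMUP_UPDATES = 2000    # length of the linear warmup

def learning_rate(step, total_steps, max_lr=MAX_LR, warmup=WARMUP_UPDATES):
    """Linear warmup from 0 to max_lr, then cosine annealing back down to 0.

    `total_steps` must be larger than `warmup`; its value is an assumption,
    since the text does not state how long training ran.
    """
    if step < warmup:
        return max_lr * step / warmup
    progress = (step - warmup) / (total_steps - warmup)
    return 0.5 * max_lr * (1.0 + math.cos(math.pi * progress))

# Twelve attention heads with 64-dimensional states each give the model width.
NUM_HEADS = 12
HEAD_DIM = 64
MODEL_DIM = NUM_HEADS * HEAD_DIM  # 768
```

For example, with a hypothetical `total_steps` of 100,000, `learning_rate(1000, 100_000)` is half the peak rate, `learning_rate(2000, 100_000)` is the peak itself, and the rate then falls smoothly to zero at the final update.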

On natural language inference (also known as textual entailment) tasks, models are evaluated on their ability to interpret pairs of sentences from various datasets and classify the relationship between them as “entailment”, “contradiction” or “neutral”.

Another task, semantic similarity (or paraphrase detection), assesses whether a model can predict whether two sentences are paraphrases of one another.

Usage of AI

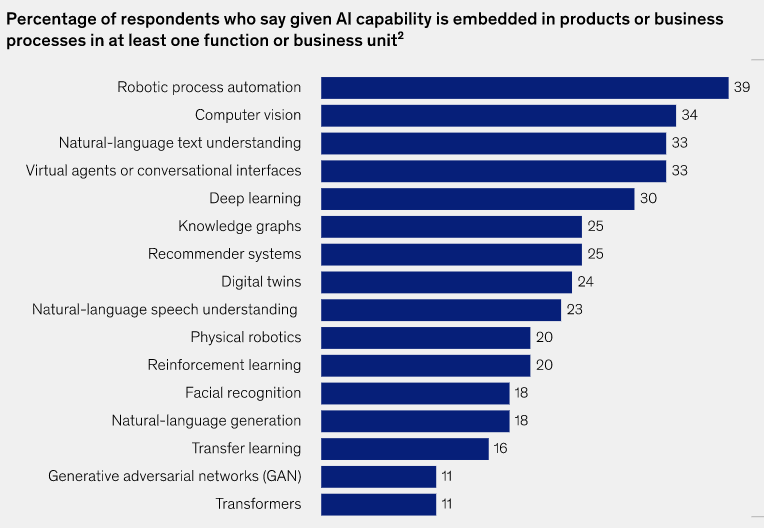

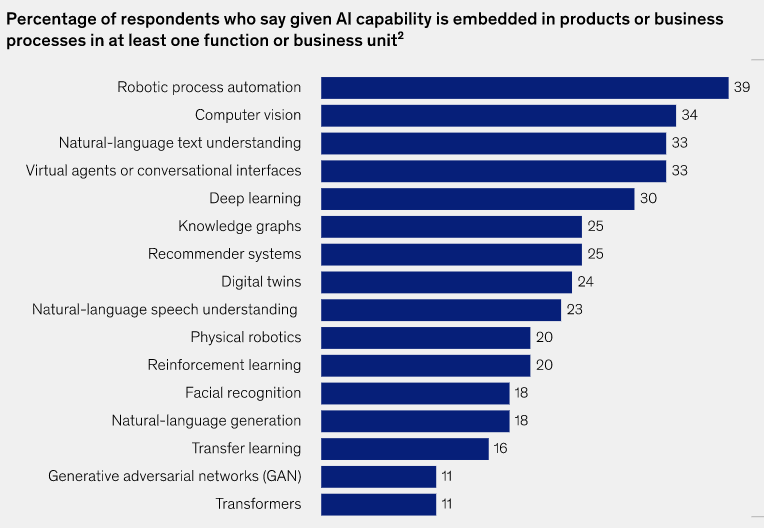

Meanwhile, the average number of AI capabilities that organizations use has increased. Among these capabilities, robotic process automation and computer vision have remained the most commonly deployed each year.

The level of investment in AI has also increased alongside its rising adoption.

In addition, the specific areas in which companies see value from AI have evolved. Earlier, manufacturing and risk were the two functions in which the largest shares of respondents reported seeing value. Today, the biggest reported revenue effects are found in marketing and sales, product and service development, and strategy and corporate finance, while the highest cost benefits from AI are expected in supply chain management.
